All Choked Up

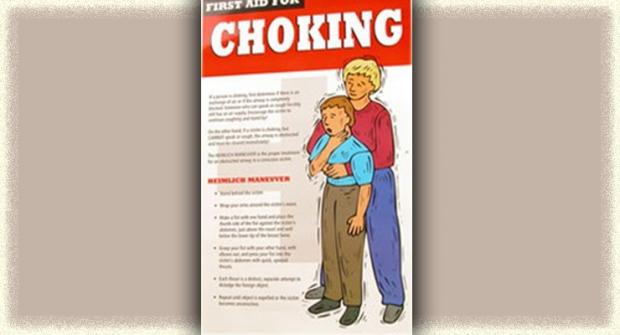

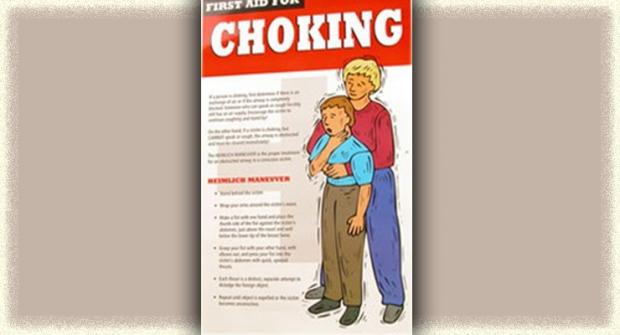

It’s not uncommon in ALS for something unexpectedly and abruptly to aggravate your airway and throw you into a choking fit. I know from personal experience that these choking, gagging, gasping spells are physically exhausting, frustrating and upsetting for both the choker and the caregiver.The actions to take for choking depend on the type of choking spell you’re having. For example: Read More

Recent ALSN Articles

Bats and Nuts Yield Environmental Clue to ALS on Guam

High rates of ALS on Guam may have been caused by the native people’s predilection for eating bats, according to a new theory.Two researchers proposed the theory based partly on observations that the bats — a delicacy among native Guamanians — eat poisonous nuts from the indigenous cycad tree."If we’re correct that an environmental toxin in the diet causes [ALS] among the people in Guam, it might lead to an investigation of environmental toxins — including dietary ones — elsewhere," said one of the researchers, Paul Alan Cox.

Read MoreTake Falls Seriously to Prevent Further Injuries

There was a period of time last year when Ira Anderson was "falling ridiculously all over the place." Reluctantly, he agreed to get a cane and found one with an ornate carved metal handle "that matched my personality."Unfortunately, the cane made a better fashion accessory than a fall-prevention device. An uneven surface or misjudged step still put Anderson on the ground.

Read MoreGulf War Vets More Likely to Get ALS

A government-supported research team announced in the Sept. 23 issue of the journal Neurology that having served in southwest Asia during the Gulf War significantly increases the risk of later developing ALS.Veterans of the Gulf War were found to be about twice as likely to develop ALS as were military personnel who didn’t go to the gulf during the same period. (This finding was released as a preliminary announcement late in 2001. The results are now fully analyzed and "official.")

Read More

All Choked Up

It’s not uncommon in ALS for something unexpectedly and abruptly to aggravate your airway and throw you into a choking fit. I know from personal experience that these choking, gagging, gasping spells are physically exhausting, frustrating and upsetting for both the choker and the caregiver.The actions to take for choking depend on the type of choking spell you’re having. For example:

Read MoreEquipment Corner September 2006

When it’s time for a gastrostomy, or feeding, tube, you must decide on the kind of tube, tube size, feeding delivery options, type of food, and how you’re going to affix a tube to your abdomen.Here are some helpful tips to consider when choosing the equipment that’s right for you.A PEG (percutaneous endoscopic gastrostomy) tube is the most common solution, as it allows you and your caregiver to administer nutrients directly to the stomach.

Read MoreRespite Care Benefits both Patient and Caregiver

Linda Parr had a hard time convincing her husband and caregiver Larry to take a little break and let someone provide respite care for her. Larry worried Linda wouldn’t get the quality of care he could provide. If she hadn’t pushed for it, he probably wouldn’t have done it.But Linda knew that using respite care was important to both of them. “When Larry was gone he was able to have a meal without having to help me. He had a good night’s sleep without my waking him up. It helped to give him more rest. Caregivers really need to get rest and relaxation.”

Read More

Talking to Your Kids About ALS

Aimee Chamernik, 37, of Grayslake, Ill., and (from left) sons Nick, 9; Zachary, 3; husband Jim and daughter Emily, 7. Chamernik writes about talking with her children about ALS. As we huddle on the couch, munching popcorn and engrossed in our movie, the kids and I are startled by a sharp knock at the door. Before I can shift forward to start the painstaking process of standing up, our neighbors burst into our house.

Read More

Helping Hands

"It is one of the most beautiful compensations of life that no man can sincerely try to help another without helping himself." — Ralph Waldo Emerson Caring for someone with ALS may be one of the most difficult things a person will ever do. The physical and emotional demands caregivers face can be brutal, and often may seem insurmountable.

Read MoreThe Strain of Silence

Ron Harrison, 72, of Lake St. Louis, Mo., joked recently about one of the hallmarks of ALS — the loss of speech.When his wife of 43 years, Darlene, and a family friend were unable to decipher what Ron was trying to share with them, he repeated it until, after numerous tries, it came out clearly.

Read MoreIn the Market for New Wheels?

Accessible public transit, such as taxis and buses with adaptive equipment, unfortunately cannot always be relied on to show up when needed, even in urban areas. That means personal acquisition of an adapted vehicle becomes a serious consideration for people using wheelchairs.Adapted vehicles come in a bewildering variety of shapes, sizes, configurations, capabilities and price ranges. Where to begin the selection process? Some basic issues need to be addressed.

Read MoreMDA Resource Center: We’re Here For You

Our trained specialists are here to provide one-on-one support for every part of your journey. Send a message below or call us at 1-833-ASK-MDA1 (1-833-275-6321). If you live outside the U.S., we may be able to connect you to muscular dystrophy groups in your area, but MDA services are only available in the U.S.